In the world of computers, the central processing unit (CPU) is–well–central. Your first computer course probably explained it like the brain of the computer. However, sometimes you can overload that brain and CPU designers are always trying to improve both speed and throughput using a variety of techniques. One of those methods is DMA or direct memory access.

As the name implies, DMA is the ability for an I/O device to transfer data directly to or from memory. In some cases, it might actually transfer data to another device, but not all DMA systems support that. Sounds simple, but the devil is in the details. There’s a lot of information in this introduction to DMA by [Andrei Chichak]. It covers different types of DMA and the tradeoffs involved in each one.

DMA is especially useful for transferring blocks of data (for example, data from a disk drive, audio, or video data) at high speeds. It is also useful for slow data (like UARTs) so that the CPU doesn’t have to block itself waiting for a slow I/O device. In the old days, sometimes the processor wasn’t fast enough to read a fast stream, but today it is likely that the processor is super fast. You just don’t want to tie it up with a slow I/O device. But that has changed how DMA architectures work over time. Usually, when a block transfer completes, the CPU gets a single interrupt so it can process the incoming data or queue up more data to send to the device.

The primary way to differentiate DMA schemes is what happens to the processor while the memory is in use by another device. An older processor is likely to use block mode where the processor simply stalls while the memory is in use. That makes sense because the I/O device is probably faster than the CPU anyway so the loss in terms of executed instructions will be small.

With faster processors, burst mode DMA is popular because it will limit how long the CPU is paused. In fact, many modern burst controllers will try to wait until the CPU is not using memory anyway and only stall if the CPU tries to use memory during the brief transfer.

Some processors and DMA systems can figure out when the CPU will not be using memory for a bit and do transfers totally during that time. This is usually known as transparent mode.

You might think that DMA is for “big computers,” and certainly, [Andrei’s] article centers on PC’s, SPARC, and Atmel SAM devices. However, block mode DMA on an 8085 (with an external controller) is also mentioned. We also remember that the RCA 1802 had DMA on the CPU which was amazing in its day. That DMA is what made a simple front panel possible for the ELF computers and a cool (for its day) video graphics chip. Admittedly, if you are writing with a modern operating system and you aren’t writing device drivers, you probably don’t need to use DMA. But for real-time systems you can easily analyze, DMA can be both a great simplification and a boost to overall system throughput.

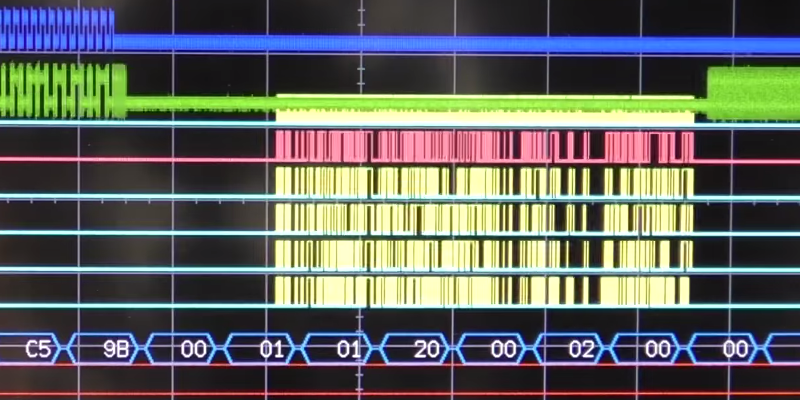

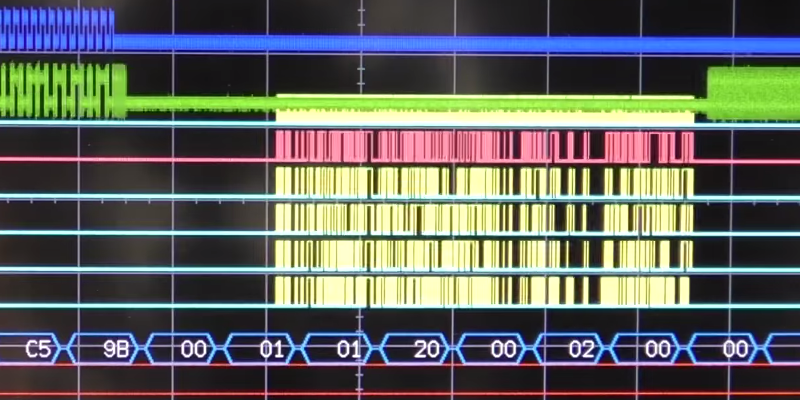

While this intro has a lot of background, it doesn’t show any real concrete examples. If you want to see [Mike Harrison’s] practical results of using DMA for SPI on a PIC32, be sure you read our post on that from last year.

DMA on dsPIC33 microcontrollers is pretty handy. The one I’m using has dual-port SRAM, so the CPU and DMA can both access RAM at the same time. I use it to keep my DAC buffers full of samples and to grab ADC samples and stuff them in a buffer automatically

Alan Hightower says:DMA got it’s name from the original timing scheme where a read cycle from the I/O device via DMA request line presented data on a shared bus while a simultaneous write strobe assertion to a memory device stored that shared data bus value to a memory location – or vice-versa. There was never an intermediate storage step like a FIFO or CPU register – hence ‘Direct Memory Access’. Today DMA is used very loosely to describe any bus mastering peripheral which includes any secondary co-processor that handles de-coupled data movement. For your SPI, UART, etc, examples, readers should beware a DMA scheme could add additional latency as data is unavailable until the programmed transfer length is done. And it may even be less efficient overall for small transfer units due to setup overhead. I’ve had many junior engineers suggest a DMA I/O solution without working through the timing or framing issues.

Hmm says:“I’ve had many junior engineers suggest a DMA I/O solution” Understandably, for some uC’s (ARM?), it’s touted or inferred as a secondary, dumb thread – just set it up to free-run and set a flag/interrupt on completion.

Unferium says:With the X86/64 CPU having levels of cache and a RAM-Controller (was in the northbridge, now integrated):

I’d thought the device-wanting-DMA could send an interrupt (Or something) to the CPU/Core for DMA access between device addresses and the CPU only needs to set that connection up before continuing execution of the local caches until a completed-DMA Interrupt was caught? Wouldn’t that be the minimum of involvement in the DMA transfer?

Wouldn’t it be up to micro-code in the memory controller to keep track of memory protection to prevent code/data mix-up? I know very little about this area of technology (Relative to those whom, say, program/design these systems) with sheer metric and imperial tonnes more questions (Mostly regards x86/x86-64)… But I have some ideas/speculation/simulative-thinking… Just quite a lot of uncertainty with the validity and/or plausibility of my knowledge (Lack there-of)

Modern systems with the memory controller integrated into the CPU actually caused a slight redefinition of the term DMA. It originally was defined as peripherals accessing RAM bypassing the CPU. That doesn’t work in modern systems when the only way to the RAM is through the CPU. It does bypass the CPU *cores*, but not the CPU itself.

Unferium says:Thanks, partially answers one of the questions….however: Depends on the definition of the CPU:

either physical: as in one package, or metaphysical: as in the CPU is a part of the silicon package that also houses a northbridge/memory controller and peripherals and thus can still be thought of as seperate things. Gonna read more comments below…. Still: thanks anyway. :)

A basic DMA transfer works a bit like this: the CPU sets up a transfer by telling the DMA controller what address to start reading at, what address to start writing at, and how much data to copy. Once the DMA controller has this information it begins copying data while the CPU continues on its merry way. When the transfer is complete the DMA controller sends the CPU an interrupt to signal it has finished.